AI’s $200B Question

GPU capacity is getting overbuilt. Long-term, this is good. Short-term, things could get messy.

The generative AI wave, which began last summer, has gone into hyperspeed. The catalyst for this double acceleration was Nvidia’s Q2 earnings guidance and subsequent beat, which signaled to the market an insatiable level of demand for GPUs and AI model training.

Before Nvidia’s announcement, consumer launches like ChatGPT, Midjourney and Stable Diffusion had raised AI into the public consciousness. With Nvidia’s results, founders and investors were delivered empirical evidence that AI can generate billions of dollars of net new revenue. This has shifted the category into its highest gear yet.

While investors have extrapolated much from Nvidia’s results—and AI investments are now happening at a torrid pace and at record valuations—a big open question remains: What are all these GPUs being used for? Who is the customer’s customer? How much value needs to be generated for this rapid rate of investment to pay off?

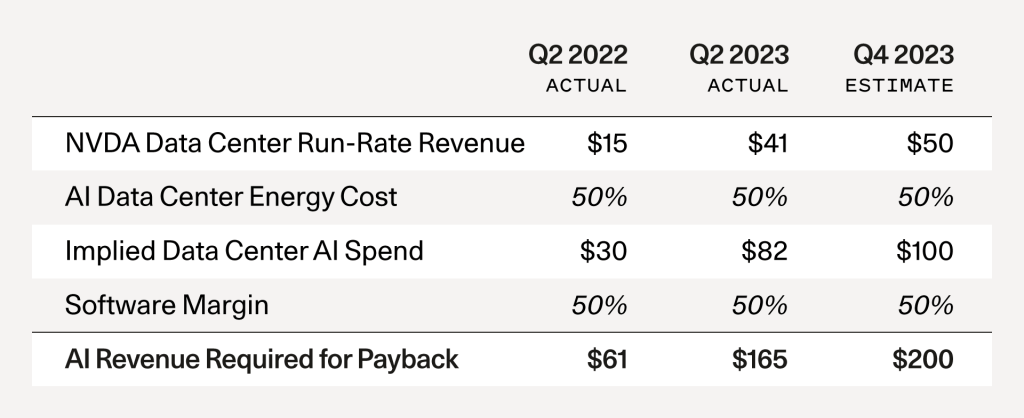

Consider the following: For every $1 spent on a GPU, roughly $1 needs to be spent on energy costs to run the GPU in a data center. So if Nvidia sells $50B in run-rate GPU revenue by the end of the year (a conservative estimate based on analyst forecasts), that implies approximately $100B in data center expenditures. The end user of the GPU (for example, Starbucks, X, Tesla, GitHub Copilot or a new startup) needs to earn a margin too. Let’s assume they need to earn a 50% margin. At a 50% margin, every $1 of cost must be matched by $2 of revenue, which implies that for each year of current GPU CapEx, $200B of lifetime revenue would need to be generated by these GPUs to pay back the upfront capital investment. This does not include any margin for the cloud vendors; for them to earn a positive return, the total revenue requirement would be even higher.
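The payback arithmetic can be reproduced in a few lines. This is a minimal sketch that hard-codes the post’s own simplifying assumptions (a 1:1 ratio of energy spend to GPU spend and a 50% end-user margin); the function name `required_lifetime_revenue` is hypothetical, and cloud-vendor margin is left out, as in the text.

```python
# Back-of-the-envelope payback math. Inputs are the post's simplifying
# assumptions (1:1 energy-to-GPU spend, 50% end-user margin), not measured data.

def required_lifetime_revenue(gpu_run_rate_bn: float,
                              energy_per_gpu_dollar: float = 1.0,
                              end_user_margin: float = 0.5) -> float:
    """Revenue (in $B) needed to cover one year of GPU CapEx plus energy
    at the given end-user margin. Cloud-vendor margin is excluded."""
    # Each $1 of GPU spend carries energy_per_gpu_dollar of energy spend.
    data_center_spend = gpu_run_rate_bn * (1 + energy_per_gpu_dollar)
    # Revenue R at margin m leaves R * (1 - m) to cover costs, so R = cost / (1 - m).
    return data_center_spend / (1 - end_user_margin)


if __name__ == "__main__":
    print(required_lifetime_revenue(50))  # 200.0
```

Raising the assumed margin shows how sensitive the result is: at a 60% margin, the same $100B of cost would require $250B of revenue.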

Based on public filings, much of the incremental data center build-out is coming from big tech companies: Google, Microsoft and Meta, for example, have reported increased data center CapEx. Reports indicate that ByteDance, Tencent and Alibaba are big Nvidia customers as well. On a go-forward basis, companies like Amazon, Oracle, Apple, Tesla and CoreWeave should also be important contributors.

The important question to be asking is: How much of this CapEx build-out is linked to true end-customer demand, and how much of it is being built in anticipation of future end-customer demand? This is the $200B question.

The Information has reported that OpenAI is generating $1B in annual revenue. Microsoft has said it expects to generate $10B in AI revenue from products like Microsoft Copilot. Let’s assume Google will generate a similar amount of revenue from its AI products, such as Duet and Bard. Let’s also assume Meta and Apple each generate $10B in revenues from AI. Let’s use a $5B placeholder for each of Oracle, ByteDance, Alibaba, Tencent, X and Tesla. These are all dummy assumptions; the point is that even if you assume extremely generous gains from AI, there’s a $125B+ hole that needs to be filled for each year of CapEx at today’s levels.
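The size of the hole follows from summing the placeholder figures and subtracting them from the $200B requirement. The sketch below simply encodes the post’s dummy revenue numbers; treating the $5B placeholder as applying to each of the six named companies is an assumption, and `revenue_gap` is a hypothetical helper.

```python
# Placeholder annual AI revenue figures from the post, in $B.
# ASSUMPTION: the $5B placeholder applies to each of the last six companies.
ASSUMED_AI_REVENUE_BN = {
    "OpenAI": 1,
    "Microsoft": 10,
    "Google": 10,
    "Meta": 10,
    "Apple": 10,
    "Oracle": 5,
    "ByteDance": 5,
    "Alibaba": 5,
    "Tencent": 5,
    "X": 5,
    "Tesla": 5,
}

# Lifetime revenue needed per year of GPU CapEx, from the earlier estimate.
REQUIRED_REVENUE_BN = 200


def revenue_gap(required_bn: float, revenue_by_company: dict) -> float:
    """Return how much required revenue is left uncovered by the assumptions."""
    return required_bn - sum(revenue_by_company.values())


if __name__ == "__main__":
    total = sum(ASSUMED_AI_REVENUE_BN.values())
    gap = revenue_gap(REQUIRED_REVENUE_BN, ASSUMED_AI_REVENUE_BN)
    print(f"Assumed AI revenue: ${total}B; remaining hole: ${gap}B")
    # prints "Assumed AI revenue: $71B; remaining hole: $129B"
```

Under these generous assumptions, roughly $71B of revenue is accounted for, leaving about $129B, which is where the “$125B+” figure comes from.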

There is a large opportunity for the startup ecosystem to fill this hole. Our goal is to “follow the GPUs” and find the next generation of startups that leverage AI technology to create real end-customer value. We want to invest in these companies.

The goal of this analysis is to highlight the gap that we see today. AI hype has finally caught up to the deep learning technology breakthroughs in development since 2017. This is good news. A major CapEx buildout is happening. This should bring AI development costs down dramatically over the long term. You used to have to buy a server rack to build any application. Now you can use the public clouds at a much lower cost. Likewise, today many AI companies are spending a large portion of their venture capital on GPUs. As today’s supply constraints give way to a supply glut, the cost of running AI workloads will come down. This should spur more product development. It should also attract more founders to build in this space.

During historical technology cycles, overbuilding of infrastructure has often incinerated capital, while at the same time unleashing future innovation by bringing down the marginal cost of new product development. We expect this pattern will repeat itself in AI.

For startups, the takeaway is clear: As a community, we need to shift our thinking away from infrastructure and towards end-customer value. Happy customers are a fundamental requirement of every great business. For AI to have an impact, we need to figure out how to leverage this new technology to make people’s lives better. How can we translate these amazing innovations into products that customers use every day, love and are willing to pay for?

The AI infrastructure build-out is happening. Infrastructure is not the problem anymore. Many foundation models are being developed; this is not the problem anymore, either. And the tooling in AI is pretty good today. So the $200B question is: What are you going to use all this infrastructure to do? How is it going to change people’s lives?

If you are building in this space, we’d love to hear from you. Please reach out at dcahn@sequoiacap.com