Exploring Autonomous Agents: A Semi-Technical Dive

Agents are all the rage, but their planning capabilities currently outpace their ability to act reliably. How do we make them truly autonomous?

Over the past several weeks, autonomous AI agents have taken the world by storm. AutoGPT is one of the fastest-growing GitHub repos in history, rocketing past PyTorch, every major Python web framework, and Python itself (sorry, Guido) in number of stars. It’s led to some pretty sweet (albeit cherry-picked) demos and has captured the imagination of Twitter thought leaders and AI doomers alike. In a weird twist of fate, the popularity of agents has probably accelerated AI doomerism more than it has accelerated progress toward the superintelligence doomers fear.

The primary distinction between agents and plain LLMs is that agents run in a self-directed loop, augmented by a lightweight prompting layer and some kind of persistence or memory. The architecture varies from agent to agent, with some focused on task prioritization and others taking a more conversational, roleplaying approach. The use cases are far-reaching, from personal assistants to automated go-to-market (GTM) teams. If you’re looking for an excellent primer and thesis on agents, look no further than my colleague Lauren Reeder’s post. And if you’re looking for the cutting edge of this family of agents, there’s LangChain’s recent post on Plan-and-Execute Agents.

Pretty exciting, but how far are we from this reality? I’m a strong believer that truly understanding a technology means getting down to the nuts and bolts, which means getting your hands dirty with the code. So I sat down for an afternoon and took a quick look at the codebases of some popular agents. Here are some observations.

Implementation

The core autonomous agent loops are pretty straightforward. In AutoGPT, for instance, each agent has access to an initial prompt, a set of actions it can execute, a history of messages, and a workspace where it can write executable code and files on the fly. An initial prompt might be something like “plan my daughter’s birthday (she really likes unicorns).” The agent then runs a modified ReAct loop and can critique its own actions through a second chat completion call with a special prompt that roughly boils down to: “review the proposed action and tell me whether it’s good or bad and why.”

On each iteration, the agent calls the OpenAI chat completion endpoint with the following context: the original directive describing the agent’s goal (“plan a sweet unicorn-themed party”), a set of commands representing procedural code the agent can run, and some short-term memory (the agent’s prior reasoning and actions, up to the token limit). The completion is formatted as a JSON dict containing “thoughts” that reason through the next action, along with the selected next action, which may itself involve another chat completion call. The agent pauses after each loop and waits for user input, which is included in future context. The context and history can be persisted in storage such as Pinecone, Redis, or, more recently, Milvus or Weaviate, but honestly a JSON dump works just fine.
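To make the loop concrete, here is a minimal, self-contained sketch of one AutoGPT-style iteration. It is not AutoGPT’s actual code: `fake_llm` is a hypothetical stand-in for the chat completion call, and the `COMMANDS` table, the `run_step` and `save_memory` helpers, and the simplified `thoughts`/`command` schema are illustrative assumptions. Persistence is a plain JSON dump, as suggested above.

```python
import json
from typing import Callable, Optional

# Hypothetical stand-in for a chat completion call. A real agent would send
# `messages` to an LLM API; here we return canned responses for illustration.
def fake_llm(messages: list[dict]) -> str:
    if messages[-1]["content"].startswith("Review"):
        return "good: searching first moves the agent toward its goal"
    return json.dumps({
        "thoughts": {"reasoning": "Start by searching for party ideas."},
        "command": {"name": "search", "args": {"query": "unicorn birthday party ideas"}},
    })

# Hypothetical command set: procedural code the agent is allowed to run.
COMMANDS: dict[str, Callable[..., str]] = {
    "search": lambda query: f"results for {query!r}",
}

def run_step(goal: str, history: list[dict], feedback: Optional[str] = None,
             llm: Callable[[list[dict]], str] = fake_llm) -> dict:
    """One loop iteration: propose an action, self-critique it, execute it."""
    # Context = directive + short-term memory (a real agent trims to the token limit).
    messages = [{"role": "system", "content": f"Goal: {goal}. Respond only in JSON."}]
    messages += history
    reply = json.loads(llm(messages))  # {"thoughts": ..., "command": ...}
    cmd = reply["command"]
    # Second completion call: ask the model to critique its own proposed action.
    # A real agent might revise or skip the action; here the critique is only returned.
    critique = llm([{"role": "user", "content": f"Review this action: {json.dumps(cmd)}"}])
    result = COMMANDS[cmd["name"]](**cmd["args"])
    history.append({"role": "assistant", "content": json.dumps(reply)})
    history.append({"role": "system", "content": f"Result: {result}"})
    # User input collected while the loop is paused becomes part of future context.
    if feedback:
        history.append({"role": "user", "content": feedback})
    return {"command": cmd["name"], "critique": critique, "result": result}

def save_memory(history: list[dict], path: str) -> None:
    # Persistence can be as simple as a JSON dump of the message history.
    with open(path, "w", encoding="utf-8") as f:
        json.dump(history, f, indent=2)
```

Swapping `fake_llm` for a real client call is the only change needed to drive the loop with a model; everything else is ordinary control flow, which is the point of the observation that follows.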

Some immediate observations: although the demos can be astounding, agent implementations are pretty straightforward under the hood. AutoGPT is, in essence, a light prompting layer running in a recursive loop, with persistent memory and the ability to write executable code on the fly. LangChain has a partial implementation of AutoGPT in which its base agent is augmented with an optional human feedback component. It’s important to note that LangChain is a framework for implementing various agents, including not just AutoGPT but also BabyAGI and direct translations of existing research (e.g., ReAct and MRKL). AutoGPT, by contrast, is a single agent implementation that has made specific decisions about overall architecture and prompting strategy.

```python
import json


# A method on the prompt-generator class (shown here without its class body).
def generate_prompt_string(self) -> str:
    """Generate a prompt string.

    Returns:
        str: The generated prompt string.
    """
    # Pretty-print the expected JSON response schema so the model can mirror it.
    formatted_response_format = json.dumps(self.response_format, indent=4)
    # Each section is rendered as a numbered list by a helper on the same class.
    prompt_string = (
        f"Constraints:\n{self._generate_numbered_list(self.constraints)}\n\n"
        f"Commands:\n"
        f"{self._generate_numbered_list(self.commands, item_type='command')}\n\n"
        f"Resources:\n{self._generate_numbered_list(self.resources)}\n\n"
        f"Performance Evaluation:\n"
        f"{self._generate_numbered_list(self.performance_evaluation)}\n\n"
        f"You should only respond in JSON format as described below "
        f"\nResponse Format: \n{formatted_response_format} "
        f"\nEnsure the response can be parsed by Python json.loads"
    )
    return prompt_string
```

// Prompting strategy in the LangChain AutoGPT implementation

An immediately achievable next step toward making these agents useful in practice is expanding their action space through LangChain tools or AutoGPT plugins. These modules define the extended set of commands an agent can perform, such as searching Google or writing code on the fly. The open source community might expand this finite set of actions with, for instance, Twitter integrations that let the agent read and post tweets, or payments integrations that navigate checkout flows. This is where LangChain and AutoGPT excel: thanks to the recent attention, developers flock to these projects to build plugins. It’s a really interesting moat. As with proof-of-work consensus in blockchains, where miners build on the longest chain, developers are incentivized to build on the most complete plugin ecosystem. The hard part is that the set of actions a user can take on the internet is nearly infinite. For agents to do everything humans can do online, the long-term solution is for agents to reliably write their own procedural code to handle novel cases gracefully, but we need a step-function improvement in models before that future is within reach.
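Conceptually, a tool or plugin is just a named, described callable that gets appended to the “Commands” list in the prompt and dispatched when the model selects it. The sketch below is a hypothetical registry, not the LangChain `Tool` class or the AutoGPT plugin API; `Tool`, `ToolRegistry`, and the example `post_tweet` plugin are illustrative names.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Tool:
    name: str
    description: str  # shown to the model so it can choose the tool
    func: Callable[[str], str]


@dataclass
class ToolRegistry:
    tools: dict[str, Tool] = field(default_factory=dict)

    def register(self, tool: Tool) -> None:
        # Each registered tool expands the agent's action space by one command.
        if tool.name in self.tools:
            raise ValueError(f"duplicate tool name: {tool.name}")
        self.tools[tool.name] = tool

    def prompt_section(self) -> str:
        # Render the numbered "Commands:" list that is injected into the prompt.
        lines = [f"{i}. {t.name}: {t.description}"
                 for i, t in enumerate(self.tools.values(), start=1)]
        return "Commands:\n" + "\n".join(lines)

    def dispatch(self, name: str, arg: str) -> str:
        # Unknown commands become an observation for the model instead of a crash.
        tool = self.tools.get(name)
        if tool is None:
            return f"Error: unknown command {name!r}. Available: {', '.join(self.tools)}"
        return tool.func(arg)


if __name__ == "__main__":
    registry = ToolRegistry()
    registry.register(Tool("search", "Search the web", lambda q: f"results for {q}"))
    # Hypothetical community plugin: posting is faked with a string.
    registry.register(Tool("post_tweet", "Post a tweet", lambda t: f"posted: {t}"))
    print(registry.prompt_section())
```

The design choice worth noting is that the registry owns both the prompt text and the dispatch table, so a plugin cannot appear in the prompt without also being executable.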

Currently, agents perform like your run-of-the-mill MBA graduate or entry-level consultant: they are very good at describing plausible solutions but very poor at executing them. Put another way, the Act component of ReAct performs poorly in unconstrained environments (ReAct itself is constrained to a predefined action space), and the agent clearly cannot reason at a deeper level about novel situations or solve problems on the fly. A concrete example: agents often hit a wall when the output of an action isn’t what they expect. They try to pull up a tweet, hit a 401 Unauthorized error, and don’t know what to do next, because they have no notion that Twitter might respond differently to a logged-in user. Practically speaking, the current generation of agents still needs quite a bit of human intervention and direction to be effective.
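One cheap mitigation for the Twitter example is to translate failures into observations the model can reason about, rather than passing back a bare status code. The sketch below assumes a hypothetical `describe_http_result` helper and hint wording of our own; it uses only the standard library (`http.HTTPStatus` and `html.parser.HTMLParser`). For successful responses, it extracts visible page text and truncates it to a character budget, a crude stand-in for mapping a page onto a limited context window.

```python
from html.parser import HTMLParser
from http import HTTPStatus

# Hypothetical recovery hints: the wording is ours, chosen to suggest a next step.
RECOVERY_HINTS = {
    HTTPStatus.UNAUTHORIZED: "You are not logged in. Authenticate (e.g. with an API key), then retry.",
    HTTPStatus.FORBIDDEN: "This account may not access the resource. Try another source.",
    HTTPStatus.NOT_FOUND: "The page does not exist. Check the URL or search for it instead.",
    HTTPStatus.TOO_MANY_REQUESTS: "You are being rate limited. Wait before retrying.",
}


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def visible_text(html: str) -> str:
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def describe_http_result(url: str, status: int, body: str, max_chars: int = 500) -> str:
    """Turn a raw HTTP result into an observation string for the agent's context."""
    try:
        phrase = HTTPStatus(status).phrase
    except ValueError:  # status code not defined in http.HTTPStatus
        phrase = "Unknown status"
    if 200 <= status < 300:
        # Crude context budgeting: keep only the first `max_chars` of visible text.
        return f"GET {url} -> {status} {phrase}: {visible_text(body)[:max_chars]}"
    hint = RECOVERY_HINTS.get(status, "The request failed. Consider a different action.")
    return f"GET {url} -> {status} {phrase}. {hint}"
```

This does not make the agent smarter; it only gives the model a hint it would otherwise lack. JavaScript-rendered pages would still need a real headless browser.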

So what’s next?

Building a working autonomous agent in an unconstrained environment is an open research problem. We are still far from this reality. That said, models are improving at an accelerating clip. AutoGPT-like agents represent an interesting practical experiment on agents in an unconstrained environment. Through experimentation and iteration on architecture and prompting strategy and enough open source contributions in the action space (plugins), this approach could plausibly start to bridge the gap between AI doomer fantasy and reality. Here is my agent wishlist to try to get us there:

- A better headless browser. The agent directives that work best are the ones that involve researching things on the internet. This works well when the agent starts with a Google search, and less well when it then visits an arbitrary web page. Agents need not only to understand and gracefully handle various HTTP errors, but also to parse arbitrarily large web pages and potentially execute JavaScript to fully mimic a human user’s experience. Then the agent has to map the perceived UX onto a limited context window. This is a very hard problem, but if you have a potential solution, here are some places to start.

- Better command selection. Currently, chat completion is responsible for command selection (“determine which command to use…”). This leads to some pretty crazy hallucinations: for instance, the birthday planning agent shown below tries to pass as an argument a file that doesn’t actually exist. There is probably some clever combination of prompting and procedural code that can minimize hallucinations and guide the agent in the right direction. For instance, you could compute the cosine similarity between embeddings of more descriptive versions of the available commands and the embedding of the chat completion response. Or you could craft the prompt to minimize edge cases or restrict the creativity of completion outputs. It’s unclear what the right solution is, but there is a lot of room for improvement here. As an aside, this is a great opportunity to explore toolformers at the model level.

```json
{
    ...
        "speak": "Let's start by analyzing any previous conversations or notes we have about the daughter's interests and preferences for her birthday party. This will help us come up with creative and unique ideas for themes, decorations, activities, and entertainment that align with her incentives and preferences. Then we can move on to managing the logistics of the party, including venue selection, guest list management, catering, and budgeting. Finally, we should anticipate and address potential issues or challenges that may arise during the planning and execution of the party to minimize stress and maximize satisfaction."
    },
    "command": {
        "name": "read_file",
        "args": { "filename": "daughter_preferences.txt" }
    }
}
```

// Example hallucination (no such file exists!)
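Here is one way the embedding idea, plus a simple argument guardrail, could look. It is a sketch under loud assumptions: `embed` is a toy bag-of-words vectorizer standing in for a real embedding model, and the command descriptions, the 0.2 threshold, and the `select_command`/`validate_args` helpers are all hypothetical. The file check targets exactly the failure in the `daughter_preferences.txt` example.

```python
import math
import os
import re
from collections import Counter
from typing import Optional

# Hypothetical, more descriptive versions of the available commands.
COMMAND_DESCRIPTIONS = {
    "google_search": "search the web with google for information about a topic",
    "read_file": "read the contents of an existing file in the workspace",
    "write_file": "write or save text content to a new file in the workspace",
}


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real agent would call an embedding model.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_command(completion: str, threshold: float = 0.2) -> Optional[str]:
    """Map free-form completion text to the closest known command, or None."""
    query = embed(completion)
    scores = {name: cosine(query, embed(desc)) for name, desc in COMMAND_DESCRIPTIONS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else None


def validate_args(command: str, args: dict, workspace: str) -> Optional[str]:
    """Return an error observation for arguments that cannot be valid, else None."""
    if command == "read_file":
        path = os.path.join(workspace, args.get("filename", ""))
        if not os.path.isfile(path):
            return f"Error: {args.get('filename')!r} does not exist; list the workspace first."
    return None
```

Returning `None` below the threshold lets the caller fall back to re-prompting instead of forcing a low-confidence match.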

- Optimizations around the action space. While the LangChain agent is parameterized by default (you decide which tools to pass on instantiation), AutoGPT defaults to a set of out-of-the-box actions. Some of these actions give agents a ton of functionality: they can read, write, and execute arbitrary code and files. In the best case, this will make agents truly autonomous and self-improving. However, agents do not yet produce useful code and files more reliably than they fail or hallucinate while trying. As a result, agents get stuck more often than they reach novel, working outcomes. The suggestion here is threefold: 1) do a bit of “hyperparameter optimization” over which subset of actions leads to the best agents, and for LangChain, ship that subset as the default set of tools; 2) allow agents to change the action set on the fly, either automatically once they hit an edge case and run into a loop (which should be easy to detect) or manually on user input; 3) build out guardrails, input validation, and better failure-mode handling for existing actions (similar to the suggestion for a better headless browser). Also, for AutoGPT, consider removing some of the more powerful, “out there” commands until the models get better.

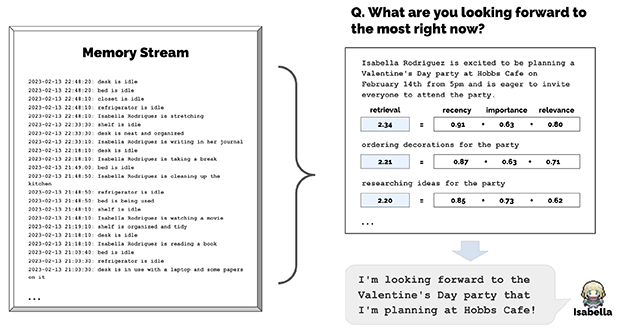
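The claim that loops “should be easy to detect” can be made concrete: keep a sliding window of recent (command, arguments) pairs and flag the agent when the same call repeats. This is a minimal sketch with a hypothetical `LoopDetector` class and arbitrary window and repeat thresholds; on detection, it drops the repeated command from the active action set, which is one possible reading of suggestion 2.

```python
import json
from collections import deque


class LoopDetector:
    """Flags an agent that keeps issuing the same command with the same args."""

    def __init__(self, window: int = 6, max_repeats: int = 3) -> None:
        self.recent = deque(maxlen=window)  # sliding window of recent calls
        self.max_repeats = max_repeats

    def record(self, command: str, args: dict) -> bool:
        # Serialize args deterministically so equal dicts compare equal.
        key = (command, json.dumps(args, sort_keys=True))
        self.recent.append(key)
        return self.recent.count(key) >= self.max_repeats


def prune_action_set(actions: set[str], stuck_command: str) -> set[str]:
    # Remove the command the agent is stuck on so it has to try something else.
    return actions - {stuck_command}


if __name__ == "__main__":
    actions = {"google_search", "read_file", "write_file"}
    detector = LoopDetector()
    for _ in range(3):
        stuck = detector.record("read_file", {"filename": "daughter_preferences.txt"})
    if stuck:
        actions = prune_action_set(actions, "read_file")
    print(sorted(actions))  # ['google_search', 'write_file']
```

Exact-match detection misses loops where the arguments drift slightly; comparing argument embeddings instead would catch more, at the cost of false positives.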

- A discriminator to rank actions. The recent Generative Agents paper described a strategy that ranked observations in memory by a linear combination of “recency,” “importance,” and “relevance.” We could try implementing something like this for both memory context and task selection or action prioritization. The devil is in the details. For instance, the paper used a separate prompt to rate importance (“on a scale of 1 to 10…”); however, depending on the use case and objective, importance might be better estimated by a numerical model (e.g., a likelihood estimator). There is likely no one-size-fits-all solution, and the best approach is probably to start with a simple prompt and iterate from there.

- More tools and plugins. Over the past two weeks, the number of approved plugins on AutoGPT has ballooned from 2 to almost 20—from Telegram to Wikipedia to crypto. LangChain tools are just as comprehensive. Each additional working plugin expands an agent’s action space and gets it that much closer to mimicking a human on the internet.

- Agent-to-agent messaging. As agents are deployed in the wild, they can start communicating with each other in novel ways. These ideas have been explored in multi-agent simulation environments, but AutoGPT and other unconstrained agents currently operate in single-player mode. Messaging could enable more complex relationships between agents with different directives, such as student-coach or competitive peer-to-peer. This would require a persistence layer for agents, the ability for agents to query all other agents, and a messaging layer, potentially with a discriminator that ranks by importance the messages received between iterations of the agent (h/t @nicktindle for this observation).

- Better models. These are a must-have to close the frequent gaps in logic that agents exhibit. GPT-4 doesn’t quite get us there; maybe the next generation of models will. In addition, current autonomous agents are slow and expensive. It may be worth exploring fine-tuning on agent trajectories to make agents faster, cheaper, and more effective for a subset of tasks. Toolformers remain underexplored but promising.
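The Generative Agents scoring idea from the discriminator bullet is simple to sketch. As I understand the paper, recency decays exponentially with time since a memory was last accessed, importance is a 1 to 10 rating, relevance is the cosine similarity between embeddings, each component is min-max normalized, and the three are summed with weights. Treat the decay rate of 0.995 per hour, the equal default weights, and the `Memory` fields below as assumptions rather than the paper’s exact implementation. The same `rank` function could order candidate actions or incoming agent messages instead of memories.

```python
import math
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    hours_since_access: float
    importance: float        # e.g. 1-10, from an LLM prompt or a numerical model
    embedding: list[float]   # assumed to come from some embedding model


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def min_max(values: list[float]) -> list[float]:
    # Scale to [0, 1]; if all values are equal, treat them as equally good.
    lo, hi = min(values), max(values)
    return [1.0 if hi == lo else (v - lo) / (hi - lo) for v in values]


def rank(memories: list[Memory], query_embedding: list[float],
         weights: tuple[float, float, float] = (1.0, 1.0, 1.0),
         decay: float = 0.995) -> list[Memory]:
    """Order items by weighted recency + importance + relevance (highest first)."""
    if not memories:
        return []
    recency = min_max([decay ** m.hours_since_access for m in memories])
    importance = min_max([m.importance for m in memories])
    relevance = min_max([cosine(m.embedding, query_embedding) for m in memories])
    w_rec, w_imp, w_rel = weights
    scores = [w_rec * r + w_imp * i + w_rel * v
              for r, i, v in zip(recency, importance, relevance)]
    order = sorted(range(len(memories)), key=lambda k: scores[k], reverse=True)
    return [memories[k] for k in order]
```

The weights are the obvious knob to tune per use case, which echoes the point that there is likely no one-size-fits-all setting.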

If there is one takeaway from all of this, it is that agents’ reasoning ability is pretty good but their ability to take action is still rudimentary. In research, agents largely run in constrained environments with limited abilities to act, like toddlers playing with toy cars in a sandbox. With autonomous commercial agents, we are seeing the first experimental attempts to run agents unconstrained in the wild. We are giving these toddlers actual cars, so to speak. This is exciting and unprecedented, and it would represent a zero-to-one improvement in agents once they work reliably. However, these toddlers don’t know how to drive and are currently crashing into most obstacles in their way. Do we replace the cars with Tonkas, or do we hope the toddlers grow up? I have made some suggestions on the toy car side in the hopes of producing some surprisingly competent toddlers, but if we want truly working autonomous agents, we also need to wait for better models.

Thanks to my colleagues at Sequoia, Lauren Reeder and Charlie Curnin, and to Ankush Gola from LangChain and Michael Graczyk for their thoughtful review and helpful suggestions for this post.